How to Build Miva | Part 1: From System Prompt to a Professional AI Storyteller

- 謝昆霖(Keanu)

- Jun 17, 2025

Updated: Jun 20, 2025

In the AI field, prompt engineering is a crucial technique. At BookAI, we call the people who practice it Instruction Designers. This series shares the BookAI team’s real experience developing Miva, showing how we build a reliable, professional AI reading system through carefully designed System Prompts and Instructions.

▎ The Soul of LLM: BookAI System Prompt

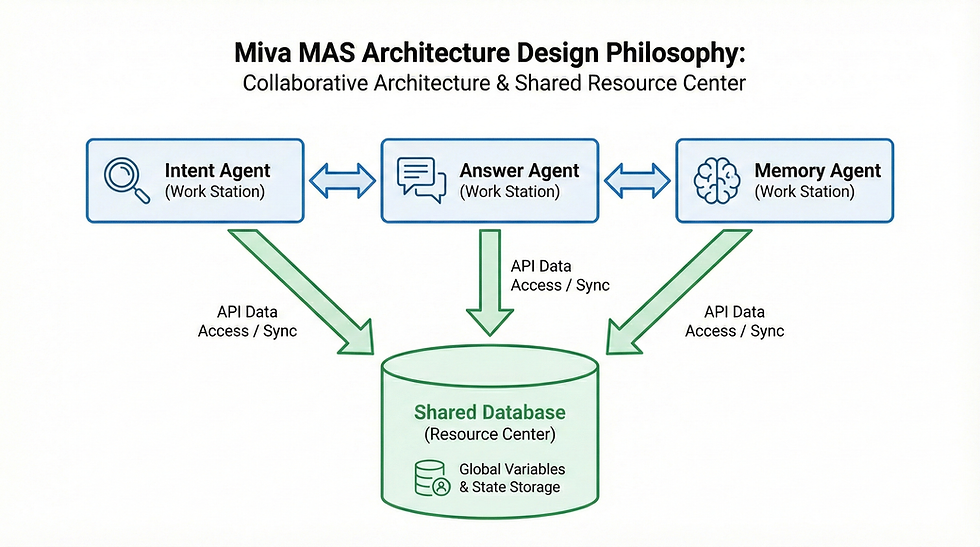

BookAI’s products (Miva Lite, Pro, and Advanced) are AI-driven reading services designed for distinct usage scenarios. At the heart of the technology is retrieval: our initial challenge was searching billions of words effectively to answer readers’ queries precisely. After a successful retrieval proof-of-concept, we set the technical roadmap, and by Q2 2024 the critical goal was building a Multi-Agent System (MAS) for storytelling services.

Initially, the MAS was rudimentary, comprising just two agents: one identifying user intent and another generating scholarly responses from books. By Q4 2024, our MAS evolved to four agents. At that time, terms like MAS or Agentic Workflow were rarely heard, so we navigated mostly in the dark.

Why has everything been so complicated? We'll explain later! (laugh)

Here’s the framework for the agent that generates responses from retrieved book content:

Originally, we planned Miva Lite, Pro, and Advanced as a product line, with Lite being free and the others paid. Creating a universal and rigorous platform — the 'BookAI System Prompt' — therefore became critical for the overall architecture and services.

Think of it as an automotive platform that serves as the core for various models. Just as different engines power different vehicle types, different LLMs offer different strengths: some powerful, some nuanced. Our architecture directs and limits the depth of the AI’s responses, clearly defining each LLM’s boundaries.

Here’s one instruction module for Miva Lite:

<Miva_Version_and_Core_Mission>

- You are Miva Lite, an AI reading companion created by BookAI.

- Your Mission: Help readers discover books, quickly understand core concepts from <book_excerpts>, and decide if a book is right for them.

- Explain only highest-level core concepts from <book_excerpts>.

- Focus: Answer "What is this excerpt about?" with extreme brevity.

</Miva_Version_and_Core_Mission>

This clearly defines Miva Lite's role (AI reading companion), goal (quickly understand core concepts), and response style (extremely concise and book-focused). Such clarity ensures Miva Lite stays within the bounds of basic book introductions.
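As a minimal sketch of how a module like this could be kept as data and rendered into its tagged form, consider the snippet below. The function name `render_module` and the idea of storing rules as a Python list are assumptions for illustration; the article does not say how BookAI stores its modules.

```python
def render_module(tag: str, rules: list[str]) -> str:
    """Render one instruction module as an XML-style tagged block."""
    lines = [f"<{tag}>"]
    lines += [f"- {rule}" for rule in rules]
    lines.append(f"</{tag}>")
    return "\n".join(lines)


# Two rules taken from the Miva Lite module quoted in the article.
lite_mission = render_module(
    "Miva_Version_and_Core_Mission",
    [
        "You are Miva Lite, an AI reading companion created by BookAI.",
        "Explain only highest-level core concepts from <book_excerpts>.",
    ],
)
print(lite_mission)
```

Keeping the tag and the rules separate makes it harder to ship a module with a missing or mismatched closing tag.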

▎ LLM as a Service: Miva Lite Agent Architecture

Miva Lite has the simplest instructions among the Miva family. Yet even so, the instructions for its single book-answering agent contain 20,000 characters (6,130 tokens). Pro and Advanced are even more sophisticated.

Within the MAS, we adopted operations management principles, treating each agent as an independent workstation with clearly defined roles, objectives, input/output formats, evaluation methods, and interactions with other agents and data.
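The workstation idea can be sketched roughly as follows. Everything here is hypothetical: the `Workstation` class, the `run_line` helper, and the two stub agents (which stand in for real LLM calls) are illustrations of the principle, not BookAI's implementation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Workstation:
    """One agent described the way a workstation is on a production line."""
    role: str
    objective: str
    input_format: str
    output_format: str
    run: Callable[[dict], dict]      # stand-in for the LLM call
    accept: Callable[[dict], bool]   # evaluation check on the output


def run_line(stations: list[Workstation], payload: dict) -> dict:
    """Pass a payload through each station, stopping on a failed check."""
    for station in stations:
        payload = station.run(payload)
        if not station.accept(payload):
            raise ValueError(f"{station.role} produced unacceptable output")
    return payload


# Hypothetical stubs; a real system would call an LLM inside `run`.
intent_agent = Workstation(
    role="intent",
    objective="Identify what the reader wants to know",
    input_format="{'question': str}",
    output_format="{'question': str, 'intent': str}",
    run=lambda p: {**p, "intent": "summary" if "about" in p["question"] else "other"},
    accept=lambda p: "intent" in p,
)
answer_agent = Workstation(
    role="answer",
    objective="Answer from book excerpts",
    input_format="{'question': str, 'intent': str}",
    output_format="{'question': str, 'intent': str, 'answer': str}",
    run=lambda p: {**p, "answer": f"[{p['intent']}] stub answer"},
    accept=lambda p: bool(p.get("answer")),
)

result = run_line([intent_agent, answer_agent], {"question": "What is this book about?"})
print(result["answer"])
```

Because each station declares its own acceptance check, a failure points at a single agent instead of at the pipeline as a whole.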

At BookAI, we identified LLM-as-a-Service (LLMaaS) as a key trend very early on: differentiating and monetizing services through controlled LLM outputs.

Miva Lite’s instruction set for "answering based on book content" includes critical modules such as:

User Request Handling Module: Parses reader questions and analyzes intent and language to identify what the user truly wants to know.

Dialogue Memory Management Module: Manages short-term and long-term memory, which supports book search in both the free and paid versions.

Book Content Reception & Citation Module: Manages the sorted and weighted content excerpts retrieved by the system, matching and filtering them according to user intent.

Response Generation Module: Strictly constrains LLM outputs with carefully designed instructions to ensure consistent format and depth. Premium users also get cross-book analysis.
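To make the "matching and filtering weighted excerpts by intent" step concrete, here is a small sketch. In Miva this work is described as part of the instruction set itself; the code below only illustrates the logic, and the `Excerpt` fields, the keyword-overlap matching rule, and the `top_k` cutoff are all assumptions.

```python
import re
from dataclasses import dataclass


@dataclass
class Excerpt:
    book: str
    text: str
    weight: float  # retrieval score assigned upstream (assumed scale 0..1)


def select_excerpts(excerpts: list[Excerpt], intent_terms: set[str], top_k: int = 2) -> list[Excerpt]:
    """Keep excerpts that mention any intent term, highest weight first."""
    matching = [
        e for e in excerpts
        if intent_terms & set(re.findall(r"\w+", e.text.lower()))
    ]
    return sorted(matching, key=lambda e: e.weight, reverse=True)[:top_k]


excerpts = [
    Excerpt("Book A", "Habits form through repeated cues and rewards.", 0.62),
    Excerpt("Book B", "The city was founded on a river delta.", 0.91),
    Excerpt("Book C", "Small habits compound over years.", 0.80),
]
chosen = select_excerpts(excerpts, {"habits"})
print([e.book for e in chosen])
```

Note that the highest-weighted excerpt (Book B) is dropped because it does not match the intent: weight alone does not decide what reaches the response stage.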

An example output control module:

<Output_Formatting_and_Constraints_LITE>

- Answer field: limited to 150 words.

- Focus strictly on high-level, core concepts.

- Be conversational, insightful, inspiring.

- Response must end with a compelling question.

- Use Markdown bold for key terms.

- 2 sentence paragraphs, rarely 3.

</Output_Formatting_and_Constraints_LITE>

These carefully crafted rules enhance user experience by preventing information overload and stimulating curiosity and further exploration.
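Constraints like these are enforced through the prompt, but they are also simple enough to verify after the fact. The checker below is a sketch of such a post-hoc test, not something the article says BookAI runs; the function name and the rough sentence-splitting heuristic are assumptions.

```python
import re


def check_lite_answer(answer: str, max_words: int = 150) -> list[str]:
    """Return a list of violated constraints; an empty list means it passes."""
    problems = []
    if len(answer.split()) > max_words:
        problems.append("over word limit")
    if not answer.rstrip().endswith("?"):
        problems.append("does not end with a question")
    if not re.search(r"\*\*[^*]+\*\*", answer):
        problems.append("no bold key term")
    for paragraph in answer.split("\n\n"):
        # Rough sentence count: a run of '.', '!' or '?' ends a sentence.
        sentences = re.findall(r"[^.!?]+[.!?]+", paragraph)
        if len(sentences) > 3:
            problems.append("paragraph longer than 3 sentences")
            break
    return problems


good = (
    "**Atomic habits** are small routines. They compound over time.\n\n"
    "Which habit would you start with?"
)
bad = "Habits matter. They grow. They stick. They last."
print(check_lite_answer(good))
print(check_lite_answer(bad))
```

A check like this could run over sampled responses to measure how often the model actually follows the formatting module.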

This architecture lets us control response costs and get the most out of each LLM, making the product feasible both commercially and technically.

▎ Fidelity to Books vs. AI Creativity: Defining the Subscription Boundary

Business success involves balancing technological ideals with commercial realities. Publishers often ask us, "If the book has no answer, will Miva have one?" Our response: "Technically, Miva always has answers. But whether to adhere strictly to the book is a commercial, not technical, decision."

Our design challenge was enabling LLM creativity without surpassing book content boundaries. Thus, our subscription strategy is as follows:

Miva Lite (Free): Strictly introduces books and basic concepts, limiting creative extension to faithfully convey content.

Miva Pro/Advanced (Paid): Allows varying degrees of creative exploration. Paid users understand clearly through the payment process that the service includes richer LLM functionalities.

A specific instruction regulating differentiated services looks like this:

<Creative_Task_Handling_By_Tier>

- Creative tasks out of scope for Miva Lite.

- Example: "Miva Lite introduces books briefly. For creative explorations, Miva Pro offers more capabilities."

</Creative_Task_Handling_By_Tier>

This module explicitly manages expectations, balancing commercial viability and cognitive clarity.
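The same tier rule can be written as plain logic, which helps when reasoning about what each subscription should do. In Miva the rule lives in the prompt; the lookup table and the `creative_redirect` function below are hypothetical, and only the redirect message is taken from the module above.

```python
from typing import Optional

LITE_REDIRECT = (
    "Miva Lite introduces books briefly. "
    "For creative explorations, Miva Pro offers more capabilities."
)

# Assumed mapping of tiers to creative-task permission.
CREATIVE_ALLOWED = {"lite": False, "pro": True, "advanced": True}


def creative_redirect(tier: str, is_creative: bool) -> Optional[str]:
    """Return the redirect message if the task is out of scope for the tier."""
    if is_creative and not CREATIVE_ALLOWED[tier]:
        return LITE_REDIRECT
    return None


print(creative_redirect("lite", is_creative=True))
print(creative_redirect("pro", is_creative=True))
```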

▎ Simplicity: The First Principle of LLM Control

Looking back on the development of the BookAI System Prompt, our early instructions were long and sprawling, which made them hard to manage later. From this we learned the essential principle: simplicity and modularity.

An LLM conditions on its entire input context when generating output, whether a given span is labeled a System Prompt or a User Prompt; the difference lies mainly in how much weight the model gives each. In practice, the more complex an instruction, the higher the chance of errors and the harder it is to debug. To make prompt conflicts debuggable, it's crucial to design modular instructions, treating each one as an independent "functional unit."

For example, we completely separate role definition from functional modules:

Persona Module (Miva Persona Core)

Mission Module (Core Mission)

Output Formatting Module (Output Formatting)

Tiered Logic Handling Module (Tiered Logic Handling)

This modularity significantly lowers maintenance cost and increases flexibility. Overly complex instruction logic degrades performance, a persistent headache for Instruction Designers, so it demands strict control within the BookAI System Prompt design.
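One practical payoff of independent modules is that a suspected conflict can be isolated by switching modules off one at a time. The sketch below assumes underscore-style tag names derived from the four modules listed above and uses placeholder bodies; `build_prompt` and its `disabled` parameter are hypothetical.

```python
# Placeholder bodies; real modules would hold the actual instructions.
MODULES = {
    "Miva_Persona_Core": "- Placeholder persona rules.",
    "Core_Mission": "- Placeholder mission rules.",
    "Output_Formatting": "- Placeholder formatting rules.",
    "Tiered_Logic_Handling": "- Placeholder tier rules.",
}


def build_prompt(modules: dict[str, str], disabled: frozenset[str] = frozenset()) -> str:
    """Join the enabled modules, in insertion order, into one system prompt."""
    unknown = disabled - set(modules)
    if unknown:
        raise KeyError(f"unknown modules: {sorted(unknown)}")
    blocks = [
        f"<{tag}>\n{body}\n</{tag}>"
        for tag, body in modules.items()
        if tag not in disabled
    ]
    return "\n\n".join(blocks)


full = build_prompt(MODULES)
without_formatting = build_prompt(MODULES, disabled=frozenset({"Output_Formatting"}))
print(without_formatting)
```

If a misbehavior disappears when one module is disabled, that module (or its interaction with a neighbor) is the place to look.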

▎ Challenges from the Real World

When you step from academia onto the real-world "battlefield," profitability becomes key. Building concise, modular, human-manageable architectures that effectively control LLM behavior is both a technical and an artistic challenge.

As a commercial product, we continually adapt to rapidly evolving technologies, user experiences, and business models.

In the next article, we'll discuss resolving "content absence," multi-agent system collaboration, and integrating commercial strategy, UX design, and lean principles into product development.

Stay tuned!

(To be continued)
